I’m currently working on a game development project, and I need a way to automatically build and deploy it. As the Tekton expert and pipeline guru on my team at Ford Motor Company, I figured I’d set up a Tekton CI/CD pipeline for the game on my own hardware.

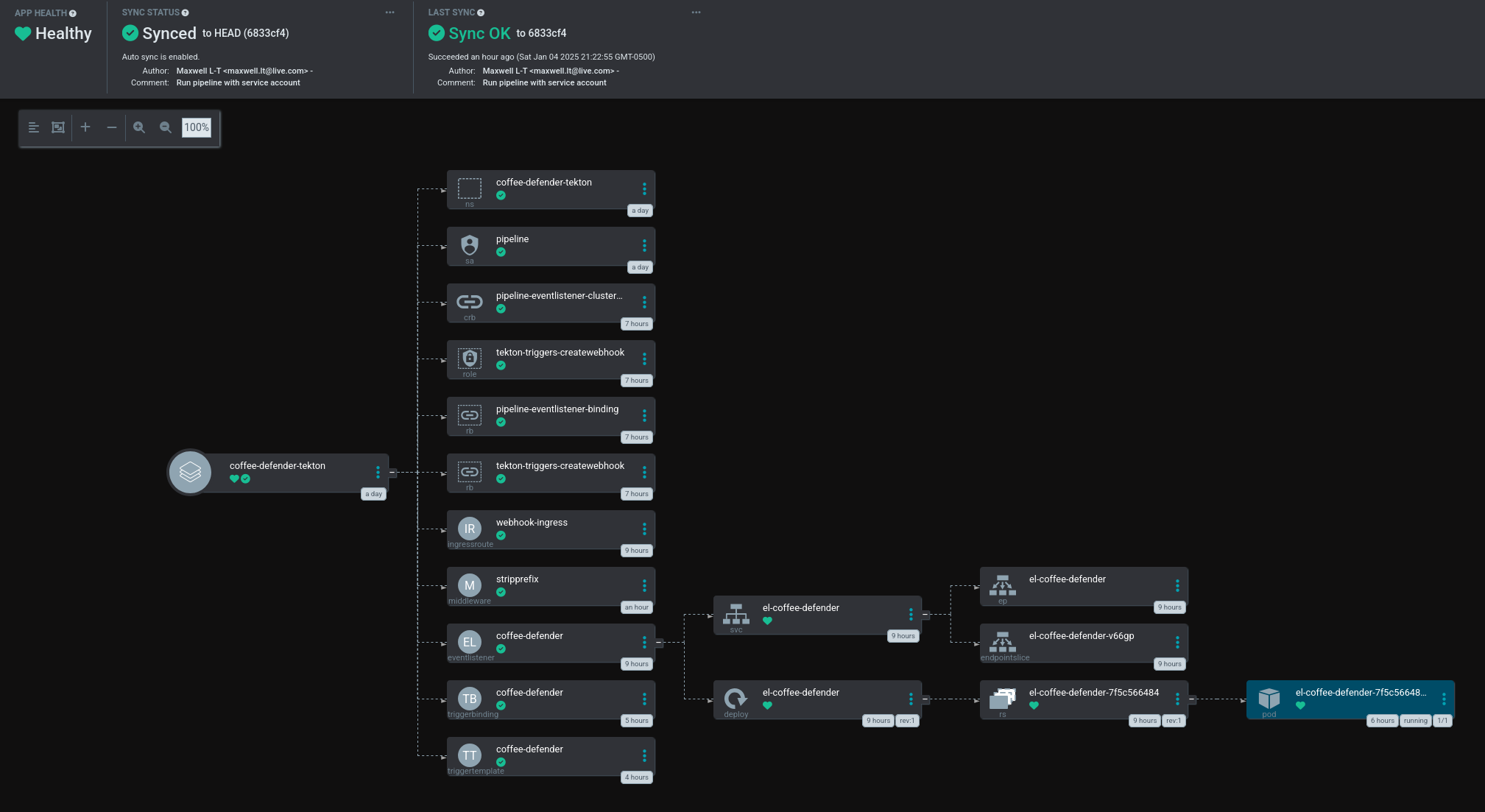

Since it runs on Kubernetes, getting Tekton running on bare metal is a bit of a challenge. I previously set up a K3s cluster and configured ArgoCD to declaratively manage it, so this post will walk through the process of assembling a Tekton pipeline on that stack.

Installing the Tekton Operator

Tekton consists of a number of different components, such as the core Pipeline functionality, Triggers, and the Dashboard. Tekton provides a way to install one component that manages all the others, which is the Tekton Operator. However, documentation on installing the Operator with ArgoCD appears to be non-existent.

The Tekton Operator installation instructions say to kubectl apply a 1000+ line multi-document YAML file from a cloud bucket. Instead, I created an ArgoCD Application and included a downloaded copy of that file. In the future, I should be able to update Tekton by replacing the contents of that YAML file with the latest version of https://storage.googleapis.com/tekton-releases/operator/latest/release.yaml. Alternatively, I could have configured ArgoCD to track the latest release’s URL, at the cost of losing control over the timing of upgrades.
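As a rough sketch, an ArgoCD Application along these lines could look like the following. The repository URL, path, Application name, and the argocd namespace are placeholders I made up for illustration, not my actual values, and the sync options are one possible choice rather than a requirement.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: tekton-operator            # hypothetical name
  namespace: argocd                # assumes ArgoCD runs in the "argocd" namespace
spec:
  project: default
  source:
    # Hypothetical repository and directory holding the downloaded
    # release.yaml alongside the TektonConfig object
    repoURL: https://git.example.com/example/cluster-config.git
    targetRevision: HEAD
    path: tekton-operator
  destination:
    server: https://kubernetes.default.svc
    namespace: tekton-operator
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      # May help if large CRDs exceed the size limits of client-side apply
      - ServerSideApply=true
```

Upgrading then becomes a matter of committing a newer release.yaml to that directory and letting ArgoCD sync it.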

Also managed by the ArgoCD Application is a TektonConfig object. This controls which parts of Tekton are installed, and manages cluster-wide configuration options.

    apiVersion: operator.tekton.dev/v1alpha1
    kind: TektonConfig
    metadata:
      name: config
      namespace: tekton-operator
    spec:
      targetNamespace: tekton-pipelines
      profile: all
      pipeline:
        git-resolver-config:
          server-url: https://git.maxwell-lt.dev

Here I set the installation profile to install all the Tekton components. I also set the default Git server URL for runtime remote resolution of Pipeline and Task resources.

With that added to ArgoCD, all the Tekton CRDs are installed and ready to use!

Setting up the namespace

The following Kubernetes objects are placed in a Git repository tracked by ArgoCD.

To run a Tekton pipeline, you need a Kubernetes namespace to hold all of the infrastructure and maintain isolation. First I created the Namespace itself.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: coffee-defender-tekton

Next, I added a ServiceAccount that the pipeline will run with.

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: pipeline
    secrets:
      - name: git-robot-basic-auth

This associated Secret was created manually, as I haven’t yet set up ArgoCD with any external secrets management systems. I created a dedicated user account on my Forgejo instance (a self-hosted GitHub alternative), and used its login credentials in this Secret.

    apiVersion: v1
    kind: Secret
    metadata:
      name: git-robot-basic-auth
      annotations:
        tekton.dev/git-0: 'https://git.maxwell-lt.dev'
    type: kubernetes.io/basic-auth
    stringData:
      username: <redacted>
      password: <redacted>

The ServiceAccount is linked to this Secret, so Tekton will inject these credentials into the git-clone step automatically. The tekton.dev/git-0 annotation is what enables Tekton to automatically use this Secret when cloning code from the specified host, which eliminates the need to explicitly specify those credentials. More information about this can be viewed in the Tekton documentation.

Interlude: Smoke Testing

Before writing a pile of YAML to spin up EventListeners, TriggerBindings, TriggerTemplates, and IngressRoutes, this is a good opportunity to run a basic pipeline and validate that the basics are in place.

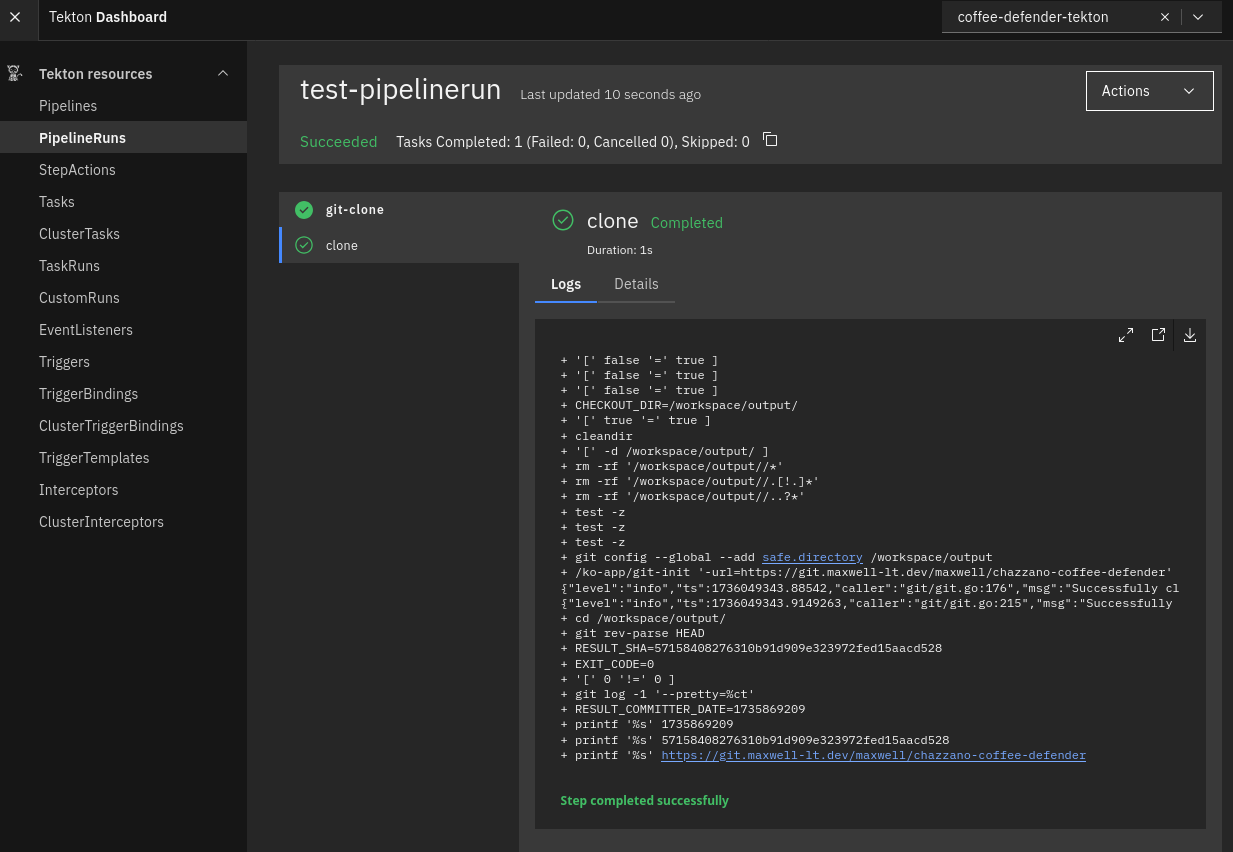

This minimal PipelineRun will, if everything is working correctly, clone the game repository using the Git HTTP credentials attached to the pipeline ServiceAccount.

    apiVersion: tekton.dev/v1beta1
    kind: PipelineRun
    metadata:
      name: test-pipelinerun
    spec:
      serviceAccountName: pipeline
      pipelineSpec:
        workspaces:
          - name: shared
        tasks:
          - name: git-clone
            taskRef:
              resolver: hub
              params:
                - name: catalog
                  value: tekton-catalog-tasks
                - name: type
                  value: artifact
                - name: kind
                  value: task
                - name: name
                  value: git-clone
                - name: version
                  value: '0.9'
            params:
              - name: url
                value: 'https://git.maxwell-lt.dev/maxwell/chazzano-coffee-defender'
            workspaces:
              - name: output
                workspace: shared
      podTemplate:
        securityContext:
          fsGroup: 65532
      workspaces:
        - name: shared
          volumeClaimTemplate:
            spec:
              accessModes:
                - ReadWriteOnce
              resources:
                requests:
                  storage: 1Gi

To test it out, I used kubectl create to instantiate it in the cluster, and confirmed that it worked.

If you’re interested in what each line of the PipelineRun definition does, the following section breaks it down.

Detailed explanation of the test PipelineRun definition

There’s a lot going on in this minimal PipelineRun, so I’ll break down what it’s actually doing.

    apiVersion: tekton.dev/v1beta1
    kind: PipelineRun
    metadata:
      name: test-pipelinerun

This specifies that the described object is a Tekton PipelineRun, and sets its name.

    spec:
      serviceAccountName: pipeline

Earlier, I created a ServiceAccount named pipeline, and bound it to a Secret annotated for use with my Forgejo instance. To use those credentials, the PipelineRun must run as that ServiceAccount.

    spec:
      workspaces:
        - name: shared
          volumeClaimTemplate:
            spec:
              accessModes:
                - ReadWriteOnce
              resources:
                requests:
                  storage: 1Gi

If you need to pass files between Tasks in a Pipeline, the PipelineRun must provide a Workspace backed by a PersistentVolumeClaim. Here I’m using a volumeClaimTemplate, which creates a fresh PVC on the fly that is cleaned up once the PipelineRun is deleted. The access mode of this PVC is set to ReadWriteOnce, which in practice means that the Tasks in the pipeline that use this Workspace should run sequentially. If I wanted to run certain Tasks concurrently, I’d likely need to change this to ReadWriteMany, but this often comes with a performance impact, depending on what storage classes are available.
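For comparison, a ReadWriteMany variant of the same Workspace might look like the sketch below. The storage class name is a placeholder I invented; whether any storage class in a given cluster supports ReadWriteMany is an assumption that has to be checked first.

```yaml
spec:
  workspaces:
    - name: shared
      volumeClaimTemplate:
        spec:
          accessModes:
            # Lets Pods on different nodes mount the volume at the same time
            - ReadWriteMany
          # Hypothetical storage class; it must actually support ReadWriteMany
          storageClassName: example-rwx-storage
          resources:
            requests:
              storage: 1Gi
```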

    spec:
      podTemplate:
        securityContext:
          fsGroup: 65532

The git-clone Task from the Artifact Hub runs as the non-root user ID 65532 for security reasons. To allow this user to write files to the volume, the podTemplate sets fsGroup to the same value.

Finally, we get to the Pipeline itself. Normally, you would want to reference an external pipeline from the PipelineRun, but it can also be defined inline, which makes things much easier when running this kind of test.

    spec:
      pipelineSpec:
        workspaces:
          - name: shared

First, a Workspace is defined at the Pipeline level. This binds to the Workspace created by the PipelineRun, and the names must match.

    spec:
      pipelineSpec:
        tasks:
          - name: git-clone
            taskRef:
              resolver: hub
              params:
                - name: catalog
                  value: tekton-catalog-tasks
                - name: type
                  value: artifact
                - name: kind
                  value: task
                - name: name
                  value: git-clone
                - name: version
                  value: '0.9'

Next, I define a single Task. The name field here can be set to anything, and other Tasks in the Pipeline may reference the task by this provided name. Instead of creating a Task within the namespace which needs to be kept up to date, I use the Hub Resolver to pull it on the fly from the Artifact Hub.

    spec:
      pipelineSpec:
        tasks:
          - name: git-clone
            params:
              - name: url
                value: 'https://git.maxwell-lt.dev/maxwell/chazzano-coffee-defender'

The git-clone Task has a lot of available parameters, but only one is required: the repository URL. I pass the URL of my Git repository to the Task as a parameter. It’s a good idea to also provide a branch, tag, or ref for the revision parameter, but if omitted this will pull from the Git repository’s default branch.
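As a small sketch of pinning the revision, the same Task parameters could be extended like this. The value master is an assumption about which branch to build; a tag or commit hash would go in the same place.

```yaml
spec:
  pipelineSpec:
    tasks:
      - name: git-clone
        params:
          - name: url
            value: 'https://git.maxwell-lt.dev/maxwell/chazzano-coffee-defender'
          # Branch, tag, or ref to check out; "master" is assumed here
          - name: revision
            value: master
```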

    spec:
      pipelineSpec:
        tasks:
          - name: git-clone
            workspaces:
              - name: output
                workspace: shared

Finally, the git-clone Task also needs a Workspace to write the cloned files to. The Workspace name expected by the git-clone Task is output, so I pass the shared Workspace into the Task under that name.

Handling Webhooks with Tekton Triggers

I want to trigger a pipeline every time code is pushed to the code repository. The best way to do this is with a webhook and Tekton Triggers.

The components I will need to create are:

- A webhook in the Forgejo repository pointing at the K3s server.

- A Traefik IngressRoute object that routes traffic to the EventListener’s automatically generated Service.

- An EventListener that handles incoming webhooks.

- A TriggerBinding that takes a JSON payload from the EventListener and pulls out fields into a flat key-value array.

- A TriggerTemplate that takes an array of parameters from the EventListener, and creates a PipelineRun.

- And finally, a Pipeline stored in a Git repository which acts as a template for the PipelineRun, pulled in with remote resolution.

Custom Traefik entrypoint

There’s one immediate issue I need to solve first, which is how to get the webhook from Forgejo into the K3s server while maintaining scalability. There are two main ways of letting one server handle HTTP traffic meant for multiple services. The first is to assign each service its own port. The problem with that solution is that port numbers only go up to 65535, putting a hard limit on how many different services can listen on a single server. The second and better way is to have a reverse proxy parse the incoming request and route it based on the Host header (and TLS SNI extension) and sometimes the requested path. This scales far better, as one server can handle requests targeting any number of domain names.

The solution I used is a combination of both of these methods. I created a new Traefik entrypoint with a port dedicated to Tekton Triggers, and I filtered incoming requests by the path prefix with an IngressRoute, so that I can handle incoming webhooks from many EventListeners across different namespaces. In the future, I can create Tekton pipelines and Triggers in separate namespaces, and re-use the same Traefik entrypoint with a different path prefix defined in the IngressRoute.

I am not yet managing core K3s resources with ArgoCD, so to open a new port for Traefik I had to manually edit its config file:

sudoedit /var/lib/rancher/k3s/server/manifests/traefik-config.yaml

    apiVersion: helm.cattle.io/v1
    kind: HelmChartConfig
    metadata:
      name: traefik
      namespace: kube-system
    spec:
      valuesContent: |-
        ports:
          web:
            exposedPort: 8080
          websecure:
            exposedPort: 8443
          # New port for incoming webhooks
          tekton:
            exposedPort: 29999
            port: 29999
            expose:
              default: true
            protocol: TCP

As of version 27.0.0 of the Traefik Helm chart, the schema for exposing a port with the LoadBalancer service changed from expose: true to expose: { default: true }. This took me a solid hour to figure out, as all of the discussions I could find on the topic occurred before this breaking change.

IngressRoute

Now that I had a dedicated port available for Tekton webhooks, I added a Traefik IngressRoute to the Tekton namespace’s ArgoCD repository.

    apiVersion: traefik.io/v1alpha1
    kind: IngressRoute
    metadata:
      name: webhook-ingress
      namespace: coffee-defender-tekton
    spec:
      entryPoints:
        - tekton
      routes:
        - match: PathPrefix(`/coffee-defender`)
          kind: Rule
          middlewares:
            - name: stripprefix
              namespace: coffee-defender-tekton
          services:
            - name: el-coffee-defender
              namespace: coffee-defender-tekton
              port: 8080
    ---
    apiVersion: traefik.io/v1alpha1
    kind: Middleware
    metadata:
      name: stripprefix
      namespace: coffee-defender-tekton
    spec:
      stripPrefix:
        prefixes:
          - '/coffee-defender'

Alongside the IngressRoute, I also added a Traefik middleware to strip out the path prefix, as the EventListener will be expecting requests with no path.
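To illustrate how the same entrypoint could be reused later, here is a sketch of an IngressRoute and Middleware for a second, entirely hypothetical project. The namespace other-project-tekton, the prefix /other-project, and the EventListener Service el-other-project are made-up names; only the tekton entrypoint comes from my actual setup.

```yaml
apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: webhook-ingress
  namespace: other-project-tekton        # hypothetical namespace
spec:
  entryPoints:
    - tekton                             # same dedicated entrypoint as before
  routes:
    # A different path prefix keeps the two projects apart
    - match: PathPrefix(`/other-project`)
      kind: Rule
      middlewares:
        - name: stripprefix
          namespace: other-project-tekton
      services:
        # Hypothetical Service generated for an EventListener named other-project
        - name: el-other-project
          namespace: other-project-tekton
          port: 8080
---
apiVersion: traefik.io/v1alpha1
kind: Middleware
metadata:
  name: stripprefix
  namespace: other-project-tekton
spec:
  stripPrefix:
    prefixes:
      - '/other-project'
```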

EventListener

Next, I configured an EventListener which receives traffic from the IngressRoute.

    apiVersion: triggers.tekton.dev/v1beta1
    kind: EventListener
    metadata:
      name: coffee-defender
    spec:
      serviceAccountName: pipeline
      triggers:
        - name: git-push
          interceptors:
            - ref:
                name: "github"
                kind: ClusterInterceptor
                apiVersion: triggers.tekton.dev
              params:
                - name: "secretRef"
                  value:
                    secretName: webhook-secret
                    secretKey: token
                - name: "eventTypes"
                  value: ["push"]
          bindings:
            - ref: coffee-defender
          template:
            ref: coffee-defender

This EventListener validates incoming webhook requests, checking if the event was triggered by a push and testing whether its signature is valid for the expected secret value.

Fixing service account permissions for the EventListener

I let ArgoCD create the EventListener, but the Pod it managed failed to start. Displayed in the logs was the error message User "system:serviceaccount:coffee-defender-tekton:pipeline" cannot list resource "clustertriggerbindings" in API group "triggers.tekton.dev" at the cluster scope.

According to the Tekton Triggers documentation, some additional roles must be granted to the pipeline ServiceAccount.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: pipeline-eventlistener-binding
    subjects:
      - kind: ServiceAccount
        name: pipeline
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: tekton-triggers-eventlistener-roles
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: pipeline-eventlistener-clusterbinding
    subjects:
      - kind: ServiceAccount
        name: pipeline
        namespace: coffee-defender-tekton
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: tekton-triggers-eventlistener-clusterroles
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: tekton-triggers-createwebhook
    rules:
      - apiGroups:
          - ""
        resources:
          - secrets
        verbs:
          - get
          - list
          - create
          - update
          - delete
      - apiGroups:
          - triggers.tekton.dev
        resources:
          - eventlisteners
        verbs:
          - get
          - list
          - create
          - update
          - delete
      - apiGroups:
          - extensions
          - networking.k8s.io
        resources:
          - ingresses
        verbs:
          - create
          - get
          - list
          - delete
          - update
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: tekton-triggers-createwebhook
    subjects:
      - kind: ServiceAccount
        name: pipeline
        namespace: coffee-defender-tekton
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: tekton-triggers-createwebhook

After ArgoCD applied these roles and bindings, the EventListener’s pod successfully started.

Webhook secret

In the trigger definition of the EventListener, I specified a Secret for the GitHub Interceptor to validate requests against. Forgejo supports the GitHub standard for securing webhooks, where the HMAC signature of the payload is calculated against a secret value, and that signature is placed in the X-Hub-Signature header of the webhook request. On the receiving side, this signature can be validated, which allows the webhook sender to prove they possess the correct secret value without sending it over the network.

I manually created a Secret with the name webhook-secret, with a randomly generated secret value in the token field. If a webhook request is sent to the EventListener without a valid HMAC signature, it will be rejected.
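A minimal sketch of what such a Secret could look like is below. The value is a placeholder, and the namespace is my assumption that the Secret lives alongside the EventListener; the name and key match the secretName and secretKey referenced by the interceptor.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: webhook-secret
  namespace: coffee-defender-tekton   # assumed to match the EventListener's namespace
type: Opaque
stringData:
  # Placeholder; substitute a long, randomly generated value
  token: <redacted>
```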

TriggerBinding

Next is the TriggerBinding. This defines mappings from a structured JSON payload to a flat key-value list. That flat list can then be passed to the TriggerTemplate, and used to instantiate a PipelineRun.

    apiVersion: triggers.tekton.dev/v1beta1
    kind: TriggerBinding
    metadata:
      name: coffee-defender
    spec:
      params:
        - name: revision
          value: $(body.head_commit.id)
        - name: repository-url
          value: $(body.repository.html_url)

For this pipeline, the only fields needed are the latest commit hash and the repository URL. These are used by the git-clone Task to determine which version of the source code to check out.

TriggerTemplate

The TriggerTemplate is the final object in this Tekton Triggers chain, taking in the parameters generated by the TriggerBinding and using them to create a PipelineRun.

    apiVersion: triggers.tekton.dev/v1beta1
    kind: TriggerTemplate
    metadata:
      name: coffee-defender
    spec:
      params:
        - name: revision
          description: Git commit hash to build
        - name: repository-url
          description: URL of the Git repository
      resourcetemplates:
        - apiVersion: tekton.dev/v1beta1
          kind: PipelineRun
          metadata:
            generateName: coffee-defender-build-
          spec:
            serviceAccountName: pipeline
            pipelineRef:
              resolver: git
              params:
                - name: repo
                  value: tekton-catalog
                - name: org
                  value: maxwell
                - name: token
                  value: git-robot-api-token
                - name: revision
                  value: master
                - name: scmType
                  value: gitea
                - name: pathInRepo
                  value: pipeline/coffee-defender-build/0.1/coffee-defender-build.yaml
            params:
              - name: revision
                value: $(tt.params.revision)
              - name: repository-url
                value: $(tt.params.repository-url)
            podTemplate:
              securityContext:
                fsGroup: 65532
            workspaces:
              - name: shared
                volumeClaimTemplate:
                  spec:
                    accessModes:
                      - ReadWriteOnce
                    resources:
                      requests:
                        storage: 1Gi

Much of the content of this TriggerTemplate should look similar to the PipelineRun shown earlier, such as the creation of a workspace from a volumeClaimTemplate, and overriding the pod template to enable the git-clone Task to write files into the workspace.

At first glance, it may seem like the pipeline parameters are defined twice. A TriggerTemplate itself declares parameters that are then passed from a TriggerBinding, and those can be used within the resourcetemplates section of the TriggerTemplate with the format $(tt.params.param-name). Separately, the Pipeline is passed the same two parameters. There are scenarios where a parameter may be needed only by the TriggerTemplate, such as if that parameter is used to specify a particular pipeline by name or path. In this case, both parameters are only needed by the Pipeline itself, so they are just passed through.

While ArgoCD would allow declaratively managing Pipelines and Tasks in the namespace, a better way to manage those Tekton objects is by pulling them at runtime from a Git repo or Artifact Hub instance. In the test PipelineRun I used earlier in this post, I pulled the git-clone Task at runtime from the Artifact Hub, and in the same way I’m now pulling a custom Pipeline from a Git repository. The specific YAML file holding a Pipeline definition is identified by passing the repository name and organization, as well as the Git revision and file path. Additionally, the scmType parameter is set to gitea to maximize compatibility with Forgejo. The TektonConfig object mentioned earlier sets the default Git server URL to my Forgejo instance, so the serverURL parameter can be omitted here.

Generating a token for Pipeline remote resolution

The Git repository holding the Pipeline requires authentication, so the pipelineRef section of the TriggerTemplate specifies the name of a Secret holding an API token.

To obtain an API token, I logged in to Forgejo with the generic user account, and used the Applications page in User Settings to generate one. The token needs at least the read:repository permission scope, and the account must have read access to the repository that stores the Tekton resources. The generated token was then manually placed into a Kubernetes Secret with the name git-robot-api-token.
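As a sketch, the Secret could be shaped roughly like this. The key name token is an assumption based on what I understand to be the git resolver’s default key, and the namespace is deliberately left out because where the resolver looks for the Secret depends on how the git resolver is configured.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: git-robot-api-token
type: Opaque
stringData:
  # Assumed key name; adjust it if the resolver is configured to read another key
  token: <redacted>
```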

Pipeline

Minimal pipeline

To start, this pipeline will do nothing but pull the source code with Git, matching the functionality of the smoke test PipelineRun.

    apiVersion: tekton.dev/v1beta1
    kind: Pipeline
    metadata:
      name: coffee-defender-build
      labels:
        app.kubernetes.io/version: "0.1"
      annotations:
        tekton.dev/categories: "Continuous Integration"
        tekton.dev/platforms: "linux/amd64"
    spec:
      params:
        - name: revision
          description: Git revision
        - name: repository-url
          description: Git repository URL
      workspaces:
        - name: shared
      tasks:
        - name: git-clone
          taskRef:
            resolver: hub
            params:
              - name: catalog
                value: tekton-catalog-tasks
              - name: type
                value: artifact
              - name: kind
                value: task
              - name: name
                value: git-clone
              - name: version
                value: '0.9'
          params:
            - name: url
              value: $(params.repository-url)
            - name: revision
              value: $(params.revision)
          workspaces:
            - name: output
              workspace: shared

This pipeline accepts the revision and repository-url parameters passed into it by the TriggerTemplate, then passes them into the git-clone Task. The labels and annotations provide additional metadata, but are not strictly necessary.

Defining a build Task

To build the code, I needed to define a custom Task. Since my source code is built with Nix, I wrote a generic Task for building Nix Flake outputs.

    apiVersion: tekton.dev/v1
    kind: Task
    metadata:
      name: nix-build
      labels:
        app.kubernetes.io/version: "0.1"
      annotations:
        tekton.dev/categories: "Build Tools"
        tekton.dev/platforms: "linux/amd64"
    spec:
      params:
        - name: package
          type: string
          description: Name of Nix flake output to build
      workspaces:
        - name: source
          description: Source code directory
      steps:
        - name: build
          image: nixos/nix
          computeResources:
            requests:
              memory: 4Gi
              cpu: 8
            limits:
              memory: 8Gi
              cpu: 16
          workingDir: $(workspaces.source.path)
          env:
            - name: PACKAGE
              value: $(params.package)
            - name: HOME
              value: /tekton/home
          script: |
            #! /usr/bin/env bash
            set -euf -o pipefail
            chown -R root:root .
            mkdir -p $HOME/.config/nix
            echo "experimental-features = nix-command flakes" > $HOME/.config/nix/nix.conf
            nix build ".#${PACKAGE}"

This Task accepts one parameter with the name of the Flake output to build, and passes that as an environment variable into the script. While possible, it’s not recommended to interpolate variables within the script directly, as an interpolated parameter could execute arbitrary code.
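To make the difference concrete, here is a sketch contrasting the two approaches as fragments of a Task’s steps list. The step names are invented for illustration, and the first step is shown only as the pattern to avoid.

```yaml
steps:
  # Riskier: Tekton substitutes the parameter text into the script before the
  # shell runs it, so a crafted value can break out of the quotes and run
  # arbitrary commands.
  - name: build-interpolated        # hypothetical step name
    image: nixos/nix
    script: |
      nix build ".#$(params.package)"

  # Safer: the value reaches the script as an environment variable, so the
  # shell treats it as data when expanding "${PACKAGE}".
  - name: build-from-env            # hypothetical step name
    image: nixos/nix
    env:
      - name: PACKAGE
        value: $(params.package)
    script: |
      nix build ".#${PACKAGE}"
```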

The workingDir field is set to the mounted path of the Workspace, so the script can immediately build without having to cd into the right directory.

Because the git-clone Task uses a non-root user ID, this Task first takes ownership of the files in the workspace, as otherwise the Nix build would fail. There may be nicer ways to fix the file permissions, but this works for now. The resource limits for this task are relatively large, but running a Rust build through Nix with nothing cached takes a lot of time.

The Task runs on the nixos/nix image from Docker Hub, and uses the nix build command to build a specific output. I placed this Task at the path task/nix-build/0.1/nix-build.yaml in my tekton-catalog repository, which will be referenced by the Pipeline in the next step.

Adding the build Task to the Pipeline

    apiVersion: tekton.dev/v1beta1
    kind: Pipeline
    metadata:
      name: coffee-defender-build
      labels:
        app.kubernetes.io/version: "0.1"
      annotations:
        tekton.dev/categories: "Continuous Integration"
        tekton.dev/platforms: "linux/amd64"
    spec:
      params:
        - name: revision
          description: Git revision
        - name: repository-url
          description: Git repository URL
      workspaces:
        - name: shared
      tasks:
        - name: git-clone
          taskRef:
            resolver: hub
            params:
              - name: catalog
                value: tekton-catalog-tasks
              - name: type
                value: artifact
              - name: kind
                value: task
              - name: name
                value: git-clone
              - name: version
                value: '0.9'
          params:
            - name: url
              value: $(params.repository-url)
            - name: revision
              value: $(params.revision)
          workspaces:
            - name: output
              workspace: shared
        - name: build
          runAfter:
            - git-clone
          taskRef:
            resolver: git
            params:
              - name: repo
                value: tekton-catalog
              - name: org
                value: maxwell
              - name: token
                value: git-robot-api-token
              - name: revision
                value: master
              - name: scmType
                value: gitea
              - name: pathInRepo
                value: task/nix-build/0.1/nix-build.yaml
          params:
            - name: package
              value: 'wasm'
          workspaces:
            - name: source
              workspace: shared

In a Pipeline, the order in which Tasks are defined has no effect. By default, Tekton will attempt to execute all Tasks concurrently (limited by resource quotas), which is rarely desired. To arrange the Tasks into a directed graph, the runAfter field of a Task should be set to an array of Tasks that should run immediately before. Here the build Task arranges itself after the git-clone Task. A deployment Task would similarly specify that it runs after the build Task. Remote resolution is again used to pull the build Task at runtime from my tekton-catalog repository. The name of the Flake output I want this pipeline to build is wasm, so that is passed to the nix-build Task in the package parameter.
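As a sketch of how a later deployment step could slot into this graph, an extra entry in the tasks list might look like the following. No deploy Task exists yet, so the Task path and its source Workspace name are purely hypothetical; only the runAfter wiring is the point here.

```yaml
tasks:
  - name: deploy
    # Runs only once the build Task has finished successfully
    runAfter:
      - build
    taskRef:
      resolver: git
      params:
        - name: repo
          value: tekton-catalog
        - name: org
          value: maxwell
        - name: token
          value: git-robot-api-token
        - name: revision
          value: master
        - name: scmType
          value: gitea
        - name: pathInRepo
          # Hypothetical path; this Task has not been written
          value: task/example-deploy/0.1/example-deploy.yaml
    workspaces:
      # Assumes the hypothetical Task declares a Workspace named "source"
      - name: source
        workspace: shared
```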

Setting up the webhook in Forgejo

In the Forgejo repository settings, I added a new webhook with the default Forgejo type. The target URL is http://localhost:29999/coffee-defender, whose path matches the prefix that the IngressRoute routes to the EventListener. The HTTP method is set to POST, the content type is set to application/json, and the secret is set to the same value as in the webhook-secret Secret. I also set the webhook to trigger only on push events, and only for the master branch. Alternatively, this filtering can be done in the EventListener.
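If the branch filtering were done in the EventListener instead, one option would be to chain a CEL interceptor after the GitHub one. This is a sketch I have not run against my setup, and it assumes the push payload carries the branch in its ref field as refs/heads/master.

```yaml
triggers:
  - name: git-push
    interceptors:
      - ref:
          name: "github"
          kind: ClusterInterceptor
          apiVersion: triggers.tekton.dev
        params:
          - name: "secretRef"
            value:
              secretName: webhook-secret
              secretKey: token
          - name: "eventTypes"
            value: ["push"]
      # Drops any event whose ref is not the master branch
      - ref:
          name: "cel"
          kind: ClusterInterceptor
          apiVersion: triggers.tekton.dev
        params:
          - name: "filter"
            value: "body.ref == 'refs/heads/master'"
    bindings:
      - ref: coffee-defender
    template:
      ref: coffee-defender
```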

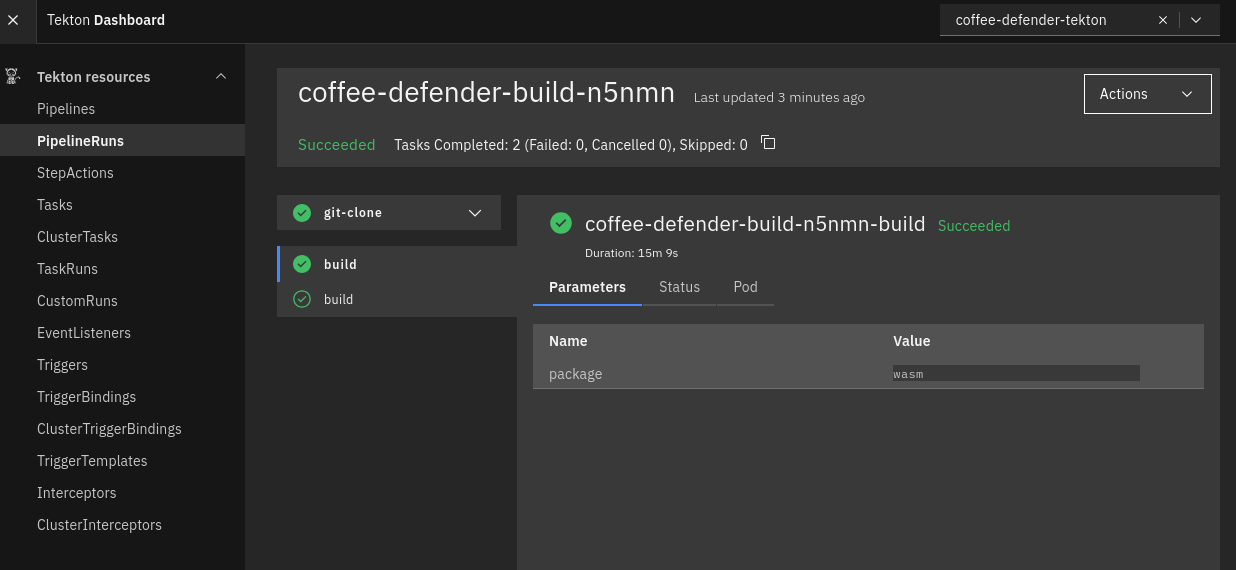

Running the pipeline

I used the test delivery button for the webhook, triggering the pipeline. After a while of waiting for the build, it finished successfully!

Conclusion

In this article, I showed how a Tekton pipeline can be run on a self-hosted K3s cluster. We took a trip across the entire stack, from exposing a custom Traefik entrypoint, through Tekton Triggers, and finally executing a custom Tekton pipeline.

At this point, I have a pipeline which can check out source code and compile it, or in other words, continuous integration without continuous deployment. In a future article, I will enhance this pipeline to deploy the compiled game to Firebase Hosting.